Linear regression

Linear regression is a supervised learning algorithm that models the relationship between a target variable and one or more predictors. It estimates the value of the target (the dependent variable) from the values of the independent variables in the dataset.

With a single predictor, linear regression has an equation of the form:

y = mx + c

Here y is the dependent variable (the target value to be predicted), m is the regression coefficient or slope, x is the independent variable (the feature value), and c is the constant or intercept.
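As a minimal sketch of how the equation is used, the function below evaluates y = mx + c for a few feature values. The function name `predict` and the values of m and c are illustrative assumptions, not taken from any dataset.

```python
def predict(x, m, c):
    """Evaluate the line y = m*x + c for a single feature value x."""
    return m * x + c


# Hypothetical slope and intercept, chosen only for illustration
m, c = 2.0, 1.0
predictions = [predict(x, m, c) for x in [0.0, 1.0, 2.5]]
print(predictions)  # [1.0, 3.0, 6.0]
```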

Linear regression can be classified into two types: simple and multiple.

In simple linear regression there is only one independent variable, whereas in multiple linear regression there is more than one.
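To make the multiple case concrete, here is a hedged sketch that recovers an intercept and two slopes with NumPy's least-squares solver. The data is hypothetical and generated without noise from y = 1 + 2*x1 + 3*x2, so the solver should return those values up to rounding.

```python
import numpy as np

# Hypothetical data generated from y = 1 + 2*x1 + 3*x2 (no noise)
X = np.array([[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0], [5.0, 5.0]])
y = 1.0 + 2.0 * X[:, 0] + 3.0 * X[:, 1]

# Prepend a column of ones so the intercept is estimated together with the slopes
A = np.column_stack([np.ones(len(X)), X])
params, *_ = np.linalg.lstsq(A, y, rcond=None)

intercept, slopes = params[0], params[1:]
print(intercept, slopes)
```

With a single feature column the same code performs simple linear regression; each extra column adds one more slope.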

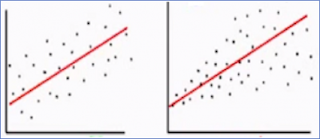

The best-fit line, also called the regression line, is chosen so that the data points lie as close to it as possible. In ordinary least squares, "close" means that the sum of the squared vertical distances (residuals) between the points and the line is as small as possible. This sum is the cost that linear regression aims to minimize. Outliers have large squared residuals, so they can pull the line toward themselves and cause the model to predict poorly for the rest of the data.
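The sketch below illustrates both points with the closed-form least-squares solution for one feature. The helper names `fit_line` and `cost` and the five data points are assumptions made for illustration. Replacing a single target value with an outlier changes the fitted slope from 2 to 6, because the distorted line has a lower cost on the contaminated data than the original line does.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def cost(xs, ys, slope, intercept):
    """Mean of the squared residuals, the quantity least squares minimizes."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
clean = [2.0, 4.0, 6.0, 8.0, 10.0]         # exactly y = 2x
with_outlier = [2.0, 4.0, 6.0, 8.0, 30.0]  # last point replaced by an outlier

print(fit_line(xs, clean))                 # (2.0, 0.0)
print(fit_line(xs, with_outlier))          # (6.0, -8.0)
print(cost(xs, with_outlier, 2.0, 0.0))    # 80.0, cost of the original line
print(cost(xs, with_outlier, 6.0, -8.0))   # 32.0, cost of the refitted line
```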

Linear regression can be used to determine the effect of the independent variables on the dependent variable. For example, suppose the knowledge you gain depends on your years of experience. The number of years is the independent variable and the knowledge is the dependent variable: as the number of years increases, the knowledge gained also increases.

Linear regression also tells us how much the dependent variable will change if there is any change in the independent variable. For example, it can be used to determine how much profit will be made if we put some effort and money into the marketing of a product.

It is also used to predict outcomes for new data that is provided as input to the model, for example predicting a stock price for the next week based on the previous trend. In short, linear regression is used for predicting outcomes and for trend forecasting.
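A minimal sketch of trend forecasting, assuming five hypothetical daily prices that happen to lie on a straight line: `np.polyfit` with degree 1 fits the trend, and the fitted line is then extrapolated one day ahead. Real prices rarely follow a straight line this closely, so treat this only as an illustration of the mechanics.

```python
import numpy as np

# Hypothetical closing prices for five consecutive days (illustrative values)
days = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
prices = np.array([10.0, 12.0, 14.0, 16.0, 18.0])

# A degree-1 polynomial fit returns the coefficients as [slope, intercept]
slope, intercept = np.polyfit(days, prices, 1)

# Extrapolate the fitted trend to the next day
next_day = 5.0
forecast = slope * next_day + intercept
print(forecast)
```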

Assumptions of linear regression

- Linearity: The relationship between the independent variable X and the dependent variable Y should be linear, meaning that a one-unit change in X leads to the same change in Y regardless of the starting value of X. A scatter plot of Y against X shows whether this holds.

- Homoscedasticity: The residuals (errors) have the same variance for every value of the independent variable, so they are spread evenly around the regression line. This can be checked by plotting the residuals against the predicted values. If the spread of the residuals shows no particular pattern, the data is homoscedastic; if the spread changes systematically, the data is termed heteroscedastic.

- Independence: The observations should be independent of one another. A time series is a typical example of dependent observations, because today's measurement tends to be close to yesterday's. When observations are correlated, the coefficient estimates become less efficient and the accuracy of the model is reduced.

- No multicollinearity: The independent variables should have little or no correlation with each other. The correlations can be computed as a correlation matrix and represented graphically with a heat map or a pair plot. When predictors are strongly correlated, it is difficult to determine which of them has the major effect on the prediction, and the coefficient estimates become unstable and less reliable.

- Normal distribution of residuals: The error terms, or residuals, should follow a normal distribution. If they do not, confidence intervals and significance tests for the coefficients become unreliable, and the data needs to be studied more carefully. For very large samples this assumption matters less, because the coefficient estimates are approximately normally distributed even when the errors are not. Normality is checked graphically with a Q-Q plot.
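Several of these assumptions can be checked numerically as well as graphically. The following is a rough sketch on synthetic data, not a full diagnostic suite. The data, the seed, and the variable names are assumptions: `x2` is built to be almost a copy of `x1` so that the correlation matrix flags multicollinearity, while the noise is generated with constant variance and no dependence between rows.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the sketch is repeatable
n = 200
x1 = rng.normal(size=n)
x2 = 0.95 * x1 + 0.05 * rng.normal(size=n)  # deliberately almost a copy of x1
x3 = rng.normal(size=n)                     # unrelated to x1 and x2
X = np.column_stack([x1, x2, x3])
y = 3.0 + 2.0 * x1 - 1.0 * x3 + rng.normal(scale=0.5, size=n)

# No multicollinearity: pairwise correlations between the predictors
corr = np.corrcoef(X, rowvar=False)

# Fit by least squares and compute the residuals
A = np.column_stack([np.ones(n), X])
params, *_ = np.linalg.lstsq(A, y, rcond=None)
fitted = A @ params
residuals = y - fitted

# Homoscedasticity: compare residual spread for low and high fitted values
order = np.argsort(fitted)
low_spread = residuals[order[: n // 2]].std()
high_spread = residuals[order[n // 2:]].std()

# Independence: lag-1 correlation of the residuals (near 0 if independent)
lag1 = np.corrcoef(residuals[:-1], residuals[1:])[0, 1]

print(np.round(corr, 2))
print(low_spread, high_spread, lag1)
```

A large off-diagonal entry in `corr` points to correlated predictors, clearly different values of `low_spread` and `high_spread` suggest heteroscedasticity, and a `lag1` value far from zero suggests dependent observations.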

Evaluation metrics

Evaluation metrics measure the difference between the predicted values and the actual values. For the error metrics below, a lower value indicates a more accurate model.

- Mean squared error (MSE):

The mean squared error is the average of the squared differences between the actual and predicted values. For n observations with actual values y_i and predicted values ŷ_i, it is written as follows:

MSE = (1/n) Σ (y_i − ŷ_i)²

- Mean absolute error (MAE):

MAE is the average of the absolute values of the errors, where each error is the actual value minus the predicted value.

- Root mean square error (RMSE):

RMSE is the square root of MSE. It is commonly used for reporting because it is expressed in the same units as the target variable, whereas MSE is in squared units and its values can be too large to compare easily. It is calculated as follows:

RMSE = √MSE
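The three metrics can be computed by hand in a few lines. This sketch uses four hypothetical actual and predicted values, chosen so that the arithmetic is easy to follow.

```python
import math

# Hypothetical actual and predicted values, for illustration only
actual = [3.0, 5.0, 7.0, 9.0]
predicted = [2.0, 5.0, 8.0, 11.0]

errors = [a - p for a, p in zip(actual, predicted)]  # actual minus predicted
n = len(errors)

mse = sum(e ** 2 for e in errors) / n   # average squared error
mae = sum(abs(e) for e in errors) / n   # average absolute error
rmse = math.sqrt(mse)                   # back in the units of the target

print(mse, mae)  # 1.5 1.0
print(rmse)
```

The errors are 1, 0, -1 and -2, so the squared errors sum to 6 and the absolute errors sum to 4, giving an MSE of 1.5 and an MAE of 1.0.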

Let’s predict Boston housing rates using linear regression.

- Importing the libraries

We use pandas for analyzing the data and matplotlib to visualize the data.

    import numpy as np
    import pandas as pd
    import matplotlib.pyplot as plt
    import seaborn as sns
    import warnings

    warnings.filterwarnings('ignore')

- Loading the dataset

The scikit-learn (sklearn) library comes with a few toy datasets. We will use the Boston Housing dataset to predict housing rates using linear regression.

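    # Load the dataset.
    # Note: load_boston was removed in scikit-learn 1.2, so this needs an older release.
    from sklearn.datasets import load_boston

    boston = load_boston()
    df_boston = pd.DataFrame(data=boston.data, columns=boston.feature_names)
    # Append the target (median home value) as the last column so it can be sliced off later
    df_boston['MEDV'] = boston.target
    df_boston.head()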

- Splitting dataset into train and test set

In this step, the feature and the target values are separated and divided into the train dataset and the test dataset.

    # Slice the dataframe into features (all but the last column) and target (last column)
    df_boston_features = df_boston.iloc[:, :-1]
    df_boston_target = df_boston.iloc[:, -1:]

    # Split the dataset into training and testing data
    from sklearn.model_selection import train_test_split

    X_train, X_test, Y_train, Y_test = train_test_split(
        df_boston_features, df_boston_target, train_size=0.8
    )

- Building the model
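Conceptually, an 80/20 split shuffles the row positions and cuts the shuffled list at the 80% mark. The sketch below shows that idea with NumPy. It is an illustration under assumed values (10 rows, seed 42), not scikit-learn's actual implementation.

```python
import numpy as np

rng = np.random.default_rng(42)     # fixed seed so the split is repeatable
n_rows = 10                         # hypothetical number of rows
indices = rng.permutation(n_rows)   # shuffled row positions 0..9

n_train = int(0.8 * n_rows)         # 80% of the rows go to training
train_idx, test_idx = indices[:n_train], indices[n_train:]

print(train_idx, test_idx)
```

Every row lands in exactly one of the two sets, so the model is evaluated only on rows it has not seen during training.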

Here, the model is instantiated and then trained using the train set.

    # Import the estimator
    from sklearn.linear_model import LinearRegression

    # Initialize the model
    linear = LinearRegression(fit_intercept=True)

    # Fit the model to the training set
    linear.fit(X_train, Y_train)

- Predicting the target values

The trained model then predicts the target for each row of the test set.

    # Predict values for the test set
    linear_pred = linear.predict(X_test)

- Retrieving the intercept

The intercept is the constant c of the fitted model.

    # Print the intercept
    print(linear.intercept_)

- Retrieving the slopes

The coefficients are the slopes, one for each feature.

    # Print the coefficients
    print(linear.coef_)
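The intercept and the coefficients fully determine the model's predictions. As a hedged illustration on a small hypothetical dataset (not the Boston data), the sketch below rebuilds the predictions by hand and compares them with `predict`.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Hypothetical data generated from y = 4 + 1.5*x1 - 2*x2 (no noise)
X = np.array([[1.0, 0.0], [0.0, 1.0], [2.0, 1.0], [3.0, 5.0], [4.0, 2.0]])
y = 4.0 + 1.5 * X[:, 0] - 2.0 * X[:, 1]

model = LinearRegression().fit(X, y)

# A prediction is the intercept plus the coefficient-weighted sum of the features
manual = model.intercept_ + X @ model.coef_

print(np.allclose(manual, model.predict(X)))
```

Here `y` is one-dimensional, so `coef_` is a flat array. When the target is passed as a single-column DataFrame, as in the walkthrough, `coef_` is two-dimensional and `intercept_` is a one-element array, and the manual formula has to account for that shape.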

- Visualizing the best-fit line

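Because the model uses several features, the plot compares predicted values with actual values instead of drawing the fitted line itself. Points on the red diagonal are predicted exactly.

    # Visualize predictions against actual values on the test set
    plt.figure(figsize=(12, 6))
    plt.scatter(Y_test, linear_pred)
    plt.plot(Y_test, Y_test, color='r')  # reference line: predicted = actual
    plt.ylabel('Predicted House Rate')
    plt.xlabel('Actual House Rate')
    plt.show()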

- Evaluation metrics

The three metrics are computed on the test set.

    # Evaluate the predictions with the metrics described earlier
    from sklearn.metrics import mean_squared_error, mean_absolute_error

    MSE = mean_squared_error(Y_test, linear_pred)
    MAE = mean_absolute_error(Y_test, linear_pred)
    # RMSE as the square root of MSE; this avoids the squared=False argument,
    # which recent scikit-learn releases have deprecated
    RMSE = np.sqrt(MSE)

    print("The mean squared error (MSE) is : ", MSE)
    print("The mean absolute error (MAE) is : ", MAE)
    print("The root mean squared error (RMSE) is : ", RMSE)
